Live streaming has gone mainstream because of a perfect storm of factors – increasing internet penetration worldwide, bandwidth becoming cheaper, mobile devices becoming cheaper and significantly more powerful, and finally, the multitude of streaming and Over-The-Top (OTT) options people now have, whether at home or on-the-go.

Live streaming has become essential to media consumption today, but is content delivery alone enough? No. A seamless, near-instantaneous viewing experience is just as essential. This is where low-latency streaming comes into play.

What is low-latency streaming?

Traditional ‘live streaming’ is not truly live. There is a delay, or ‘latency’, between when the content is captured and when viewers first see it on their screens. Depending on the setup, this delay can range from several seconds to a minute or more.

Low-latency video streaming refers to transmitting video content over the internet in near real time, with minimal delay between capturing the content and displaying it to the end user. In practice, it reduces the camera-to-screen delay to a few seconds or less.

Why do we need low-latency streaming?

Essentially, the more interactive the experience, the easier it is for the content to resonate with the audience.

With low-latency streaming, viewers can watch the action unfold in near real time. They are not just streaming content anymore; they’re as close to ‘live’ as it gets, reacting and interacting as events happen. Whether it's cheering for their favorite team during a sports event, staying up to date with breaking news, or engaging in live conversations with content creators, low-latency streaming ensures viewers do not miss out or get frustrated by seeing a critical event long after it has happened.

This creates a connection between the audience and the content like no other, making live streaming even more compelling and immersive overall.

Understanding latency: Standard, low, and ultra-low latencies

Before looking at low-latency video streaming in detail, it is important to understand what latency is and what types of latency exist.

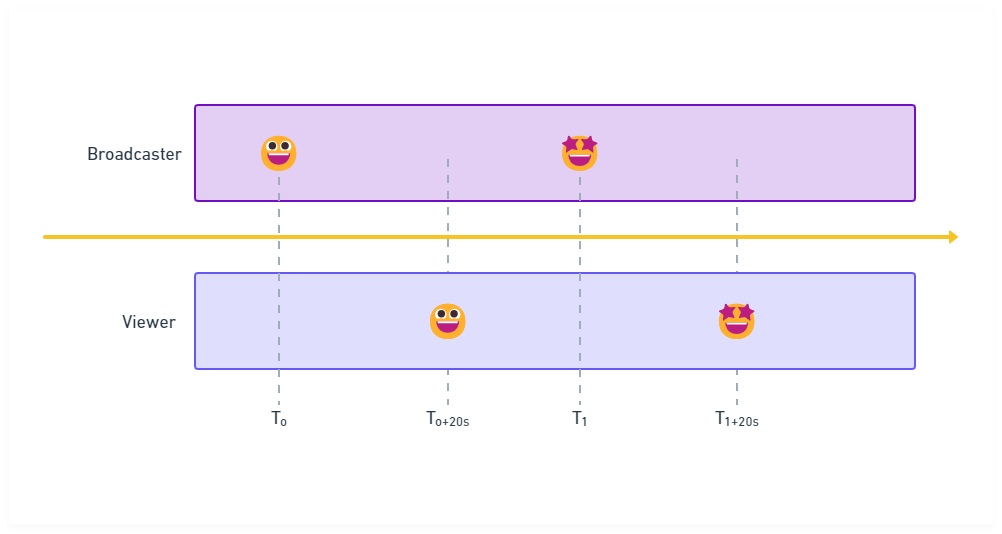

In the context of video streaming, latency is the delay between the capture of an event and the moment it is displayed to a user. The illustration above shows this.

In this example, assume an event was captured at T0 and displayed to the user 20 seconds later, at T0 + 20 s. In this case, the latency is 20 seconds.

Latency is usually measured in seconds and is a crucial parameter in determining the quality of the user experience in video streaming. It is commonly divided into three types:

- Standard Latency

- Low Latency

- Ultra-low Latency

Let’s discuss the three briefly to understand the concept better.

Standard Latency

Traditional streaming without any modifications is a “standard” latency stream, with a delay that usually ranges from 6 to 18 seconds. It is generally acceptable when interactivity is not the top priority. For example, if you are watching a recorded lecture or a TV show, standard latency will not noticeably affect the intended user experience.

Typical use cases are therefore streams that are entirely one-way and do not require interaction, such as videos from OTT services, pre-recorded lectures, conferences, or virtual events.

The standard live streaming protocols, HLS (HTTP Live Streaming) and DASH (Dynamic Adaptive Streaming over HTTP), are good enough to be used as is for streams with standard latency. Both deliver video over plain HTTP, which means CDNs can cache and distribute it efficiently. This makes live streaming scalable, stable, and secure, but adds latency.

You can read more about HLS, DASH, and their differences here.

Low Latency

A stream is considered a low-latency video stream when the delay is between 2 and 6 seconds. This relatively short delay makes low-latency video streaming ideal for applications that require near real-time connectivity and interactivity.

Compared to traditional streaming (standard latency), low-latency streaming strikes a balance between offering a much more responsive viewing experience and minimizing buffering.

Usually, streaming services like YouTube live streams, Twitch, live sports broadcasts, concerts, webinars, etc. use low-latency video streaming. Viewers can participate in live chats, react to content, and interact with content creators or presenters almost instantaneously, fostering a sense of community.

To enable low-latency video streaming, extensions to the industry-standard DASH and HLS protocols, Low Latency DASH (LL-DASH) and Low Latency HLS (LL-HLS), are commonly used. In the past, Real-Time Messaging Protocol (RTMP) served as another option for playback, but with the deprecation of Adobe Flash and growing concerns about security and performance, the industry shifted towards LL-DASH and LL-HLS for delivery to viewers instead.

Ultra-low Latency

An ultra-low latency video stream takes the concept of “low-latency streaming” to the extreme, providing an almost instantaneous experience to the viewer, with a delay of only 0.2 to 2 seconds.

Achieving an ultra-low latency (ULL) stream is exceptionally challenging. The WebRTC and SRT protocols are used for streaming videos at ultra-low latency, but ULL streams involve much more than just the protocol – you need to control multiple factors from encoding to delivery, such as:

- A robust CDN infrastructure, strategically placed around the world to deliver content to users from the closest server

- Adopting WebRTC (Web Real-Time Communication) or Secure Reliable Transport (SRT) as your protocol of choice. WebRTC enables real-time peer-to-peer communication directly between web browsers and other applications, without routing media through a central server (a signaling server is still needed to set up the connection). SRT, on the other hand, provides reliable and secure data transmission over unpredictable networks, which makes it well suited to live streaming scenarios.

- Utilizing modern codecs such as VP9 or AV1 with low-latency encoder settings, combined with hardware acceleration and specialized video encoders/decoders.

- Breaking the video stream into smaller segments and sending them more frequently, reducing the time the player has to wait before it can start playing each piece of video

- Adopting Adaptive Bitrate Streaming (ABR) to dynamically adjust the video quality based on the user's network conditions, while minimizing buffering and latency.
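To make the adaptive bitrate idea in the last point more concrete, here is a minimal Python sketch of how a player might pick a quality level from its measured bandwidth. The bitrate ladder, the `pick_rendition` function, and the 0.8 safety factor are illustrative assumptions, not values taken from any particular player.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int  # average encoded bitrate of this quality level


# Hypothetical bitrate ladder, ordered from highest to lowest quality.
LADDER = [
    Rendition("1080p", 5000),
    Rendition("720p", 2800),
    Rendition("480p", 1400),
    Rendition("240p", 400),
]


def pick_rendition(measured_kbps: float, safety_factor: float = 0.8) -> Rendition:
    """Return the best rendition that fits within the usable bandwidth.

    The safety factor (an assumption) leaves headroom so that small
    bandwidth dips do not immediately cause buffering.
    """
    usable_kbps = measured_kbps * safety_factor
    for rendition in LADDER:
        if rendition.bitrate_kbps <= usable_kbps:
            return rendition
    # Nothing fits: fall back to the lowest quality rather than stalling.
    return LADDER[-1]


print(pick_rendition(4000).name)  # 720p
```

A real player would re-run a decision like this continuously as its bandwidth estimate changes, and would usually also take the current buffer level into account.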

Even though the delay is the lowest with ULL streaming, it requires careful setup and configuration. If not set up properly, it can affect the quality of your stream. Also, implementing protocols like WebRTC and SRT is complicated, demanding a deeper understanding of both web video technology and network infrastructure.

But for applications where ultra-low latency is actually crucial (think interactive auctions and time-sensitive fintech use cases), the pros of an almost instantaneous connection far outweigh the cons.
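As a quick recap of the three tiers, the sketch below computes latency from a capture time and a display time and maps it to the ranges used in this article. The function name is hypothetical, and the handling of values that fall exactly on a boundary, below 0.2 seconds, or above 18 seconds is an assumption made for illustration.

```python
def classify_latency(capture_time_s: float, display_time_s: float) -> str:
    """Classify camera-to-screen delay using the ranges from this article."""
    latency_s = display_time_s - capture_time_s
    if latency_s < 0:
        raise ValueError("display time cannot be earlier than capture time")
    if latency_s <= 2:
        return "ultra-low"  # roughly 0.2 to 2 seconds
    if latency_s <= 6:
        return "low"  # roughly 2 to 6 seconds
    return "standard"  # roughly 6 to 18 seconds (assumed to include anything longer)


# The earlier example: captured at T0 = 0 s, displayed at T0 + 20 s.
print(classify_latency(0, 20))  # standard
```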

Now that you have some idea about the different types of latency, let’s look more closely at low-latency video streaming and who needs it.

Who Needs Low Latency Video Streaming?

Low-latency streaming, with a delay ranging from 0.2 to 6 seconds, can create a better viewer experience across a wide range of sectors, industries, and use cases. Let’s take a look at some examples:

- OTT or Over-The-Top Media Services: While platforms like Netflix and Amazon Prime Video primarily deliver pre-recorded content, there are specific use cases within OTT that could benefit from low-latency streaming. For example, if the platform offers live events, such as concerts, or exclusive premieres, low-latency streaming can help by offering immediate audience feedback, live chats, and real-time engagement that enriches the experience.

- Education: Low-latency streaming is increasingly valuable in the field of education, especially in virtual classrooms and interactive learning environments. In 2023, the ed-tech and smart classroom industry was valued at 133 billion US dollars, and it is expected to reach 433 billion US dollars by 2030. One of the primary reasons for this growth is the ability to learn from great teachers, from anywhere. In live classes, it is critical that students can ask questions, seek clarification, and participate in discussions as if they were physically present in the classroom. With low-latency video streaming and instant feedback, the experience comes close to sitting in a classroom.

- Live Streaming Events: Events like concerts, sports competitions, conferences, and virtual trade shows thrive on low-latency streaming to provide viewers with a seamless and dynamic experience. Almost all major launches worldwide host an online event to increase the hype and reach a wider audience. Here, real-time engagement, instant reactions, and live Q&A sessions contribute to the excitement and participation of the audience, making the event a success.

- Live Commerce: Low-latency streaming is crucial for live auctions as it ensures that bids and updates are communicated almost instantaneously, creating a fair and competitive environment for participants. It allows auctioneers to respond to bids promptly and keep the auction process fluid and engaging, and also enables the customers to ask questions, seek product details, and make informed bidding decisions in real-time.

- Telehealth: Remote consultations and medical video conferences benefit from low-latency streaming to enable smooth and timely communication between healthcare providers and patients. Near real-time interactions support accurate diagnoses, quick decision-making, and better patient care, particularly in urgent or critical medical situations.

As you can see from the chart below published by the Grand View Research team, the market size of telehealth is steadily growing.

Now that you understand what low-latency video streaming is and what its use cases are, let’s discuss how to achieve it.

How to achieve low-latency video streaming?

To deliver video streaming with low latency efficiently, you’ll need a robust infrastructure, a clear understanding of content-specific requirements, and the right protocol.

What does “the right protocol” mean? A widely used and reliable protocol for streaming is HLS (HTTP Live Streaming). HLS is an adaptive bitrate streaming protocol developed by Apple and widely used across platforms and devices. Cloud-based digital asset management platforms like ImageKit make generating adaptive HLS streams straightforward with a developer-friendly, URL-based API that can generate manifests in near real time, compatible with any HTML5 video player.

You can learn more about ImageKit’s Adaptive Bitrate Streaming and HLS capabilities here.

But HLS has inherent latency that makes it a poor fit for real-time applications, such as live sports and interactive events.

To understand these limitations, let’s look at how HLS works first.

The HLS protocol begins by segmenting the original video into smaller chunks. These segments are delivered as separate files, traditionally encoded in the MPEG transport stream format with the .ts extension. The .ts files are stored on an HTTP server and delivered to the user over HTTP (Hypertext Transfer Protocol), along with a playlist file that lists them in order. On the client side, the video player must download a segment in full before it can start playing that segment.
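To illustrate what the player works with, here is a small Python sketch that reads segment URIs and durations from an HLS media playlist. The sample playlist and the segment file names are made up for illustration, and the parser only handles the `#EXTINF` tag, so treat it as a sketch rather than a complete HLS parser.

```python
# A made-up media playlist with three 6-second .ts segments.
SAMPLE_PLAYLIST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:120
#EXTINF:6.0,
segment120.ts
#EXTINF:6.0,
segment121.ts
#EXTINF:6.0,
segment122.ts
"""


def parse_segments(playlist: str) -> list[tuple[str, float]]:
    """Return (uri, duration_in_seconds) for each segment in the playlist."""
    segments = []
    pending_duration = None
    for raw_line in playlist.splitlines():
        line = raw_line.strip()
        if line.startswith("#EXTINF:"):
            # Format: "#EXTINF:<duration>,[<title>]"
            pending_duration = float(line[len("#EXTINF:"):].split(",")[0])
        elif line and not line.startswith("#") and pending_duration is not None:
            # A non-tag line after #EXTINF is the URI of that segment.
            segments.append((line, pending_duration))
            pending_duration = None
    return segments


for uri, duration in parse_segments(SAMPLE_PLAYLIST):
    print(f"{uri}: {duration:.1f}s")
```

For a live stream, the player re-fetches this playlist periodically and downloads each newly listed segment in turn.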

Also, these segments are typically 6 seconds each, following Apple's current recommendation. Segments this long take more time to produce and download, leading to increased latency.

So if the target is low latency, these 6-second chunks need to be smaller. What if we could reduce the segments to 2-3 seconds instead? Alas, that only goes so far with conventional HLS: shorter segments mean more frequent HTTP requests and playlist refreshes, and that overhead starts to work against the latency gains.
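The trade-off can be put into rough numbers. The sketch below assumes a player that buffers three full segments before it starts playback, which is a common rule of thumb and not something stated in this article; the function name is hypothetical, and encoding, upload, and CDN delays are ignored.

```python
def segment_tradeoff(segment_s: float, buffered_segments: int = 3) -> dict:
    """Estimate latency and request rate for a given segment duration.

    Assumes playback starts only after `buffered_segments` whole segments
    have been downloaded, and counts segment requests only (playlist
    refreshes would add to the total).
    """
    return {
        "segment_s": segment_s,
        "approx_latency_s": segment_s * buffered_segments,
        "segment_requests_per_minute": 60 / segment_s,
    }


for duration in (6, 3, 2):
    print(segment_tradeoff(duration))
```

Under these assumptions, 6-second segments give roughly 18 seconds of latency with 10 segment requests per minute, while 2-second segments give roughly 6 seconds of latency but triple the request rate.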

That’s where Apple’s low-latency extension to HLS (LL-HLS) comes into play.

How LL-HLS Achieves Low Latency Streaming

Live Streams

For live streaming, the LL-HLS protocol aims to achieve latencies comparable to traditional broadcast television, which is typically in the range of a few seconds, by making the following improvements:

- Reduced Segment Durations: LL-HLS significantly reduces the effective segment duration by dividing each segment into smaller pieces called Partial Segments, usually packaged using CMAF. While the base HLS spec might have segment lengths of ~6 seconds, a Partial Segment might be only 200 milliseconds long, which minimizes the time the player has to wait for the next piece of video.

- Playlist Delta Updates: Instead of re-sending the entire playlist on every refresh, an LL-HLS server can send only the portion that has changed since the client’s last request (“delta updates”), further reducing the amount of data transmitted and improving overall latency.

- Byte-Range Requests: LL-HLS uses byte-range requests, allowing the player to preload specific parts of a segment instead of fetching the whole segment. This reduces the amount of redundant data transfer and lowers latency.
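As a rough illustration of partial segments and byte-range requests working together, the Python sketch below reads `EXT-X-PART` tags from a made-up LL-HLS playlist excerpt and derives the HTTP `Range` header a player could send for each part. The file names and byte offsets are invented, the helper names are hypothetical, and the sketch assumes every `BYTERANGE` value carries an explicit offset.

```python
import re

# Made-up excerpt: two 200 ms parts of the same segment file.
SAMPLE_PARTS = """#EXT-X-PART-INF:PART-TARGET=0.2
#EXT-X-PART:DURATION=0.2,URI="segment101.mp4",BYTERANGE="20000@0"
#EXT-X-PART:DURATION=0.2,URI="segment101.mp4",BYTERANGE="18500@20000"
"""

# Matches KEY=value or KEY="quoted value" pairs in a tag's attribute list.
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attributes(tag_line: str) -> dict[str, str]:
    """Split a tag such as '#EXT-X-PART:A=1,B="x"' into an attribute dict."""
    _, _, attr_text = tag_line.partition(":")
    return {key: value.strip('"') for key, value in ATTR_RE.findall(attr_text)}


def range_header(byterange: str) -> str:
    """Convert an HLS '<length>@<offset>' value into an HTTP Range value."""
    length, _, offset = byterange.partition("@")
    start = int(offset)  # assumption: the offset is always present
    end = start + int(length) - 1  # HTTP byte ranges are inclusive
    return f"bytes={start}-{end}"


for line in SAMPLE_PARTS.splitlines():
    if line.startswith("#EXT-X-PART:"):
        attrs = parse_attributes(line)
        print(attrs["URI"], attrs["DURATION"], range_header(attrs["BYTERANGE"]))
```

Each part can then be requested and played on its own, without waiting for the full parent segment to be complete.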

On-Demand Streaming

LL-HLS’ improved techniques can also be applied to OTT/on-demand streaming to improve startup times and reduce buffering. In addition to the above three, the protocol also adds some new playlist tags that can help with streaming on-demand content:

- Predictive Fetching: The LL-HLS spec introduces the EXT-X-PRELOAD-HINT tag, with which a server publishing an LL-HLS stream announces the likely location of the next chunk to the client. This means that for on-demand content (which is just a pre-recorded video file), the client-side video player already knows the next media segment(s) and can pre-fetch them, minimizing the time it takes to start playing new segments as they become available.

- Rendition Reports: LL-HLS is an adaptive streaming protocol, meaning the client can switch between video renditions of varying quality depending on the available bandwidth, the quality of the network connection, etc. To minimize the number of round trips the client must make to the server for this, an LL-HLS server publishes the EXT-X-RENDITION-REPORT tag, which tells the client the most recent segment and part available in each of the other renditions.
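Here is a minimal Python sketch of how a player might read these two tags from the end of a playlist. The playlist excerpt, the URIs, and the `read_hints` helper are hypothetical, and real players handle more cases, such as hints of other types.

```python
import re

# Made-up tail of an LL-HLS media playlist.
PLAYLIST_TAIL = """#EXT-X-PART:DURATION=0.2,URI="segment273.part3.mp4"
#EXT-X-PRELOAD-HINT:TYPE=PART,URI="segment273.part4.mp4"
#EXT-X-RENDITION-REPORT:URI="../720p/playlist.m3u8",LAST-MSN=273,LAST-PART=3
#EXT-X-RENDITION-REPORT:URI="../480p/playlist.m3u8",LAST-MSN=273,LAST-PART=3
"""

# Matches KEY=value or KEY="quoted value" pairs in a tag's attribute list.
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attributes(tag_line: str) -> dict[str, str]:
    _, _, attr_text = tag_line.partition(":")
    return {key: value.strip('"') for key, value in ATTR_RE.findall(attr_text)}


def read_hints(playlist: str):
    """Return (next_part_uri, {rendition_uri: (last_msn, last_part)})."""
    next_part_uri = None
    reports = {}
    for line in playlist.splitlines():
        if line.startswith("#EXT-X-PRELOAD-HINT:"):
            attrs = parse_attributes(line)
            if attrs.get("TYPE") == "PART":
                # The player can request this URI before the part is listed.
                next_part_uri = attrs["URI"]
        elif line.startswith("#EXT-X-RENDITION-REPORT:"):
            attrs = parse_attributes(line)
            reports[attrs["URI"]] = (int(attrs["LAST-MSN"]), int(attrs["LAST-PART"]))
    return next_part_uri, reports


next_part, reports = read_hints(PLAYLIST_TAIL)
print("prefetch:", next_part)
for uri, (msn, part) in reports.items():
    print(f"{uri}: latest segment {msn}, part {part}")
```

With the rendition reports in hand, a player that decides to switch quality already knows where the other rendition currently stands, without first fetching that rendition's playlist.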

As you can see, Low-Latency HLS (LL-HLS) serves as a compelling solution to address the inherent latency issues faced by traditional HLS streaming, whether for live streaming or on-demand content.

Conclusion

The increasing demand for OTT services and live-streaming video has opened up new possibilities, and recent technological advances now allow near real-time interaction through live streams.

In this article, we took a deep dive into low-latency video streaming: the different types of latency, the use cases for low-latency streaming and why you might need it, and how it can be achieved through LL-HLS, Apple’s official extension to the HLS protocol.