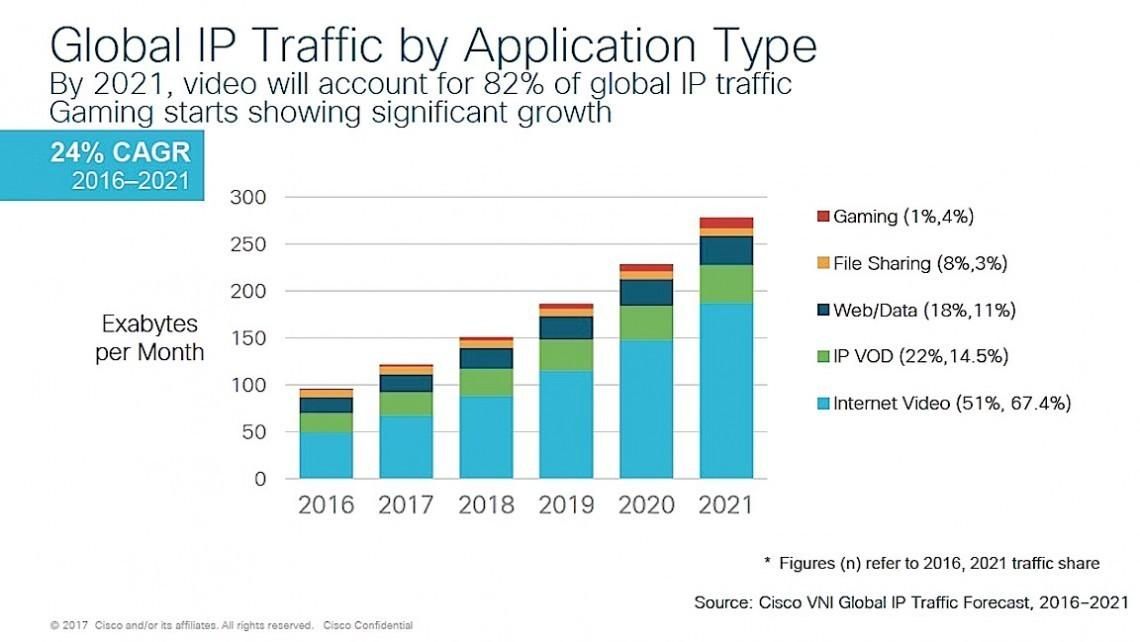

Streaming video (whether live or on-demand) has rapidly become the most sought-after content on the internet. Globally, it accounted for 82% of all consumer internet traffic as of 2022[1], and the reasons are clear. Powerful mobile devices are widely available, and video is a highly engaging medium that conveys information more quickly and effectively than most other content formats.

Any improvement in technology that makes the web faster and richer ultimately makes streaming video a more attractive – and accessible – option.

But despite its popularity, video streaming is not without its challenges. Some common ones include:

- Buffering: When the video playback is delayed or interrupted due to slow internet speed, network congestion, or issues with the streaming server, leading to frustration and a poor viewing experience.

- Poor video quality: Less buffering isn’t the only measure of a good user experience. A video might play smoothly but still look poor if low bandwidth or network congestion leaves the stream bitrate-starved, producing visible compression artifacts. This detracts from the viewing experience and reduces engagement.

- Latency: The delay between the video capture and its display on the viewer's device, perceived as a lag in real-time video streaming. This lag can be caused by everything from network congestion, to choice of protocol, to the encoding/decoding process, and is especially problematic for real-time or low-latency streaming needs, such as live events.

- Compatibility: Playback can fail or degrade because of outdated or legacy hardware and browsers, or because of limitations in the video player itself.

- Delivery pipeline: Delivering high-quality video content to a global audience is difficult. It’s not just a matter of encoding high-quality video and streaming it from a server. The Server/Client architecture needs careful design, because serving far-flung viewers directly from a single server quickly becomes infeasible due to distance, server capacity limits, and network congestion.

It’s fair to say that video makes the world go ‘round. But these challenges, if glossed over, can keep streaming video from ever being viable. Adaptive Bitrate Streaming (ABR), and the protocols that implement and standardize it (like MPEG-DASH and HLS), have made it easier for content creators and businesses to overcome these challenges and deliver high-quality video content to a global audience.

In this post, we’ll cover these streaming protocols (the rules, standards, and technologies that govern the adaptive transmission of video content over the internet, in real time or on demand), then look at the two main ones, MPEG-DASH and HLS: how they differ, and which one you should use. But first, let’s quickly explain the technology that both protocols implement (and standardize) in their own way: Adaptive Bitrate Streaming (ABR).

What is Adaptive Bitrate Streaming (ABR)?

We’ve looked at the problems with streaming video; now let’s look at a solution. Adaptive Bitrate Streaming is a technology that addresses many of these challenges by encoding a video at several quality levels and switching between them on the fly, based on the viewer’s bandwidth and device.

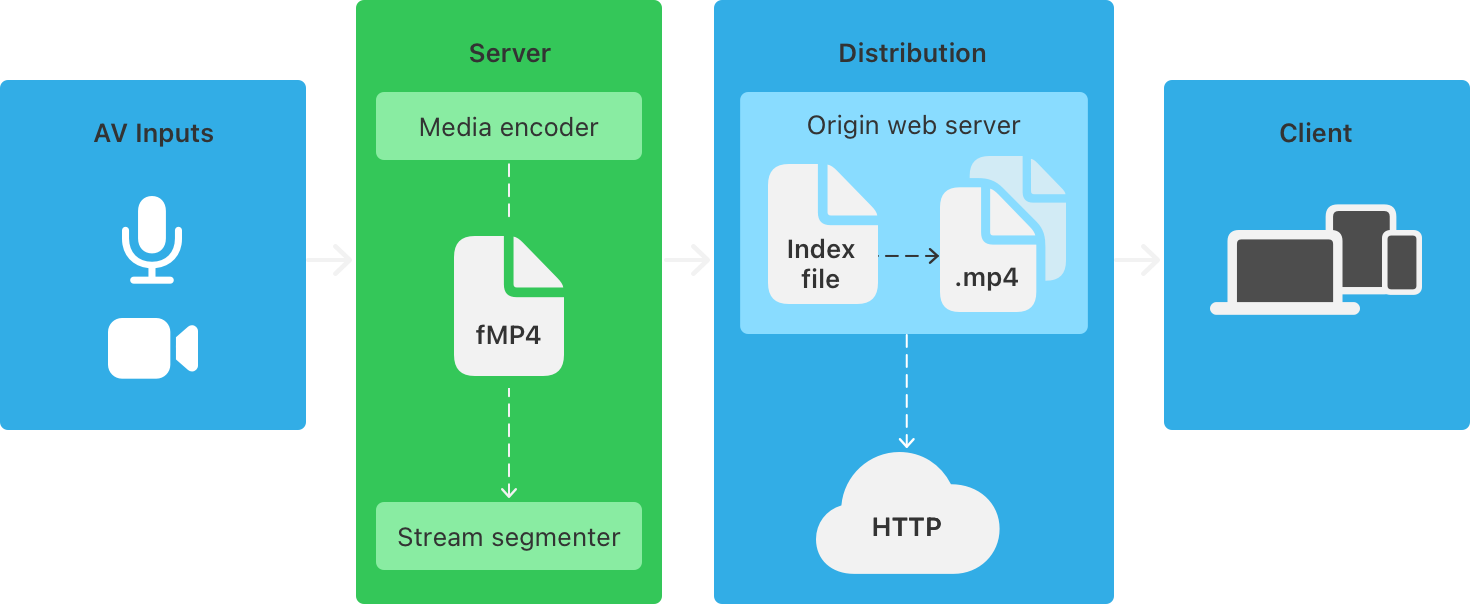

ABR’s approach (splitting a video into segments, encoding each segment at different bitrates, and having the Client-side player monitor network conditions and request the most suitable quality variant from the Server) allows smooth playback across devices, with minimal buffering and stuttering, even when internet speeds fluctuate.
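To make the Client-side half of ABR concrete, here is a minimal Python sketch of one common heuristic: throughput-based rendition selection. It assumes a hypothetical three-rung bitrate ladder, an exponentially weighted moving average (EWMA) of recent segment download speeds, and an arbitrary 0.8 safety factor. Production players use more elaborate rules, including buffer-based and hybrid ones.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int  # average encoded bitrate of this variant


# Hypothetical bitrate ladder; real ladders depend on the content and encoder.
LADDER = [
    Rendition("480p", 1_200),
    Rendition("720p", 2_800),
    Rendition("1080p", 5_000),
]


def estimate_throughput_kbps(samples_kbps: list[float], alpha: float = 0.3) -> float:
    """Exponentially weighted moving average of measured segment download speeds."""
    if not samples_kbps:
        raise ValueError("need at least one throughput sample")
    estimate = samples_kbps[0]
    for sample in samples_kbps[1:]:
        # The newest sample gets weight alpha; older history decays geometrically.
        estimate = alpha * sample + (1 - alpha) * estimate
    return estimate


def choose_rendition(samples_kbps: list[float], safety: float = 0.8) -> Rendition:
    """Pick the highest-bitrate rendition that fits within a safety margin of the estimate."""
    budget = estimate_throughput_kbps(samples_kbps) * safety
    affordable = [r for r in LADDER if r.bitrate_kbps <= budget]
    if affordable:
        return max(affordable, key=lambda r: r.bitrate_kbps)
    # Even the lowest variant exceeds the budget: fall back to it anyway.
    return min(LADDER, key=lambda r: r.bitrate_kbps)


print(choose_rendition([6000, 6500, 7000]).name)  # stable, fast link -> 1080p
print(choose_rendition([3000, 2000, 1500]).name)  # throughput dropping -> 480p
```

The safety factor is the main design lever here: a lower value switches down earlier and stalls less, at the cost of sometimes leaving quality on the table.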

The two most popular protocols that implement this technology are Apple’s HLS and MPEG’s DASH.

What is HLS (HTTP Live Streaming)?

HTTP Live Streaming (HLS) is an adaptive bitrate streaming protocol developed by Apple. It was originally used for streaming live and on-demand video content over HTTP to iOS devices, Apple TV, and macOS computers, but is now platform agnostic.

HLS works by breaking video content into small segments and encoding multiple versions of each segment at different bitrates (and usually different resolutions). This lets the Client-side video player switch dynamically between quality levels based on the viewer’s internet speed and device capabilities, so the viewer always gets the best experience their connection allows.
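In HLS, the available versions are advertised in a multivariant (or “master”) playlist: an M3U8 text file in which each `#EXT-X-STREAM-INF` tag describes one variant and is followed by the URI of that variant’s media playlist, which in turn lists the segments. The Python sketch below generates such a playlist following RFC 8216. The file names, bitrates, and codec strings are illustrative assumptions, not output from any particular packager.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    uri: str        # media playlist for this variant (hypothetical file names)
    bandwidth: int  # peak bits per second, required by EXT-X-STREAM-INF
    width: int
    height: int
    codecs: str     # RFC 6381 codec string, e.g. H.264 video + AAC-LC audio


def master_playlist(variants: list[Variant]) -> str:
    """Build an HLS multivariant ("master") playlist as plain text."""
    lines = ["#EXTM3U"]
    for v in variants:
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={v.bandwidth},"
            f'RESOLUTION={v.width}x{v.height},CODECS="{v.codecs}"'
        )
        lines.append(v.uri)  # the URI line must directly follow its tag
    return "\n".join(lines) + "\n"


playlist = master_playlist([
    Variant("480p/index.m3u8", 1_400_000, 854, 480, "avc1.4d401e,mp4a.40.2"),
    Variant("720p/index.m3u8", 3_000_000, 1280, 720, "avc1.4d401f,mp4a.40.2"),
    Variant("1080p/index.m3u8", 5_500_000, 1920, 1080, "avc1.640028,mp4a.40.2"),
])
print(playlist)
```

The player downloads this file first, then picks one variant’s media playlist based on its bandwidth estimate and re-evaluates as segments arrive.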

Pros and Cons of HLS

Let's take a quick look at the pros first.

✅ High Scalability: HLS uses standard HTTP servers, which means that it can be easily integrated with existing web infrastructure, making it cacheable and scalable using CDNs. Also, HLS supports both live and on-demand video streaming, allowing content providers to deliver video content in real-time or pre-recorded formats.

✅ High Compatibility: HLS is natively supported on Apple devices such as iPhone, iPad, Apple TV, and Mac, on most mobile browsers, and on some third-party devices such as smart TVs and set-top boxes. Almost all other modern web browsers can play it through the Media Source Extensions API, which all popular HTML5-based players (Flowplayer, Video.js, JW Player, etc.) support.

✅ Wide Range of Features: HLS supports multiple audio tracks, subtitles, closed captions, and encryption and authentication. This makes it an ideal choice for delivering video content to a global audience, as it allows content providers to offer secure streams with various language options, and accessibility features such as subtitles and closed captions for the hearing impaired.

There are still more pros to HLS, but these are the major ones. However, there are also a handful of cons to the video streaming protocol that you should be aware of.

Cons

❌ Latency: Some of HLS’s design choices (keyframe interval, packet size, and buffer requirements) add up to a minimum of 18-20 seconds of latency by default. HLS traditionally uses fairly long 6-second segments and has players queue 3 segments in their buffer before playing anything at all[1]. A CDN used for scalability adds further seconds, which makes HLS a poor fit for time-sensitive use cases that need true low-latency streams (sports events, live auctions, etc.)

❌ Limited Codec Support: Apple’s HLS specification is rigid: it allows only H.264 and H.265 (HEVC) for video, and only AAC, MP3, AC3, E-AC3, or HE-AAC for audio. It’s understandable that Apple would optimize the specification for its own family of devices and browsers, but this leaves no room for alternative codecs like VP9, AV1, or Opus.

❌ Playback only, no publishing: While WebRTC supports both publishing and playback of video streams directly from a browser, HLS only supports playback. To publish a live stream that viewers will watch over HLS, you need a separate ingest protocol to get the stream to your server, such as Adobe’s RTMP (Real-Time Messaging Protocol) or the open source SRT (Secure Reliable Transport).
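The 18-second floor in the Latency con above is essentially buffer arithmetic. As a rough estimate that ignores encoding, packaging, and CDN delays (presumably where the remaining seconds come from), with N segments queued, each of duration T:

```latex
\begin{aligned}
L_{\text{buffer}} &\approx N \cdot T_{\text{seg}} \\
\text{HLS defaults: } L_{\text{buffer}} &\approx 3 \times 6\,\text{s} = 18\,\text{s} \\
\text{2-second segments: } L_{\text{buffer}} &\approx 3 \times 2\,\text{s} = 6\,\text{s}
\end{aligned}
```

The same arithmetic shows why shortening segments, or dropping the fixed queue, cuts latency roughly in proportion.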

Ideal Use Cases for HLS

The HTTP Live Streaming (HLS) protocol is ideal for a variety of streaming use cases, including, but not limited to:

- Video-on-demand: HLS was built to prioritize multiple streams with multiple quality choices, not latency. This makes it ideal for on-demand content that benefits from multiple quality levels and is not time-sensitive, such as traditional pre-recorded movies and TV shows.

- Multiplatform Apps: HLS is supported on pretty much every platform – and also natively on Apple devices (Safari and iOS) which don’t support other protocols. This makes it ideal for apps and websites that need to support multiple platforms including Apple devices as a business requirement.

- Upgrading legacy software: Since HLS works with existing HTTP servers, adding Adaptive Bitrate Streaming to a legacy app does not require new infrastructure or an overhaul of existing server infrastructure.

What is MPEG-DASH?

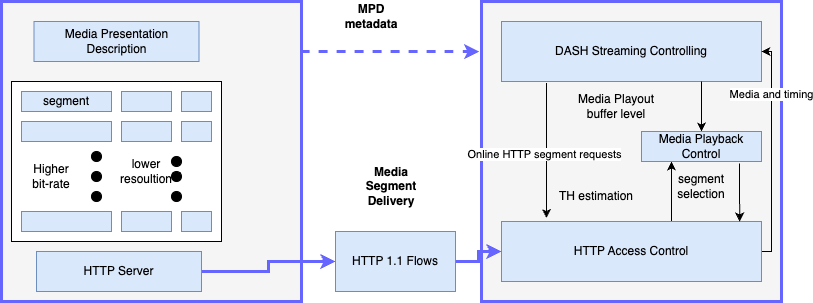

MPEG-DASH (Dynamic Adaptive Streaming over HTTP) is an open-standard adaptive bitrate streaming protocol that was developed by the Moving Picture Experts Group (MPEG).

Like other adaptive streaming protocols, MPEG-DASH also breaks down a video into small segments that can be transmitted over HTTP. However, implementation is what sets MPEG-DASH apart:

- MPEG-DASH can use HTTP Chunked Transfer Encoding, meaning parts of a segment can be sent to the client as soon as they are available, rather than waiting for the entire segment to be encoded and packaged first. DASH also does not prescribe a minimum number of segments to be queued before playback can begin. Together, these allow much lower latency.

- MPEG-DASH is codec agnostic. This means streams can potentially achieve higher quality at a given bitrate by using a more efficient codec such as VP9 or AV1.
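Chunked transfer encoding itself is plain HTTP/1.1, not something DASH invented. The response body is sent as a series of size-prefixed chunks, so the server can start transmitting a segment before it has finished producing it. The Python sketch below frames and parses such a body following RFC 9112; the byte strings stand in for CMAF chunks (each a `moof` + `mdat` box pair in low-latency DASH) and are placeholders, not real media.

```python
def encode_chunked(parts: list[bytes]) -> bytes:
    """Frame byte strings as an HTTP/1.1 chunked message body."""
    out = bytearray()
    for part in parts:
        if not part:
            continue  # a zero-size chunk would end the body prematurely
        # Each chunk: size in hex, CRLF, the data, CRLF.
        out += f"{len(part):X}\r\n".encode("ascii") + part + b"\r\n"
    out += b"0\r\n\r\n"  # last-chunk, then an empty trailer section
    return bytes(out)


def decode_chunked(body: bytes) -> list[bytes]:
    """Parse the framing back into chunks (chunk extensions and trailers not handled)."""
    chunks, pos = [], 0
    while True:
        line_end = body.index(b"\r\n", pos)
        size = int(body[pos:line_end], 16)
        pos = line_end + 2
        if size == 0:
            return chunks
        chunks.append(body[pos:pos + size])
        pos += size + 2  # skip the chunk data and its trailing CRLF


# Placeholder payloads standing in for CMAF chunks of one segment.
body = encode_chunked([b"moof+mdat #1", b"moof+mdat #2"])
print(body)
```

A player can start decoding the first chunk while the encoder is still producing the second, which is where the latency savings come from.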

Pros and Cons of MPEG-DASH

Let’s take a closer look at the pros and cons of using MPEG-DASH for video streaming. First, the pros.

Pros

✅ Reduced Costs: MPEG-DASH is an open standard with no strict codec requirement, so royalty-free codecs like VP8/VP9 for video and Opus for audio can be used, avoiding codec licensing fees altogether.

✅ Customizable: MPEG-DASH is codec agnostic for both video and audio, meaning content creators/businesses can fine tune their streams for higher quality, or even faster, more reliable streams, based on the codec choice.

✅ Ideal for low-latency streams: Chunked Transfer Encoding, combined with no strict minimum segment size (or buffer), makes MPEG-DASH far more suitable for low-latency use cases than HLS. MPEG-DASH also supports CMAF (Common Media Application Format), a container format that supports configurations for both low-latency and standard streaming without the need to encode and store multiple copies of the same content. This lets MPEG-DASH deliver low-latency streams with less buffering and a faster startup time, while still minimizing storage requirements.

Cons

❌ Lack of standards: MPEG-DASH being an open standard is both a blessing and a curse. While there are no licensing fees to use it, it also does not prescribe encoding settings, segment lengths, or how segments should be created. Each provider is free to implement its own solution, which makes customized implementations possible but also makes the developer experience inconsistent for anyone getting into its ecosystem.

❌ First-Mile Delivery: While MPEG-DASH is great for delivery to viewer devices (the “last-mile delivery”), using it as an ingest protocol (capturing and pushing a stream to a server, the “first-mile delivery”) introduces a large amount of latency. A better strategy is to ingest the live feed over RTMP or WebRTC to a server (which might have a CDN connected), and then perform the last-mile delivery to viewers using MPEG-DASH.

❌ Compatibility concerns: While MPEG-DASH was created to be platform agnostic, Apple devices and browsers still do not support it natively. Native MPEG-DASH support is also lacking in other browsers, so its streams need third-party HTML5-based players built on the Media Source Extensions API.

Ideal Use Cases for MPEG-DASH

Here are some of the most common use cases for MPEG-DASH:

- Video Streaming Services: Widely used by video streaming services such as Netflix, Hulu, YouTube, and Amazon Prime Video to deliver high-quality video content to their subscribers using adaptive streaming.

- Live Streaming: Also ideal for live streaming events using adaptive streaming. Support for true low-latency streaming provides a seamless live experience to viewers.

- Streaming 360° videos: If you need to stream immersive 360° videos, the MPEG-DASH spec provides this functionality out of the box, leveraging the MPEG’s omnidirectional media format (OMAF) for streaming 360° content.

- Security Surveillance: For delivering live video feeds from security cameras over the internet, MPEG-DASH offers low-latency, near-real-time delivery with customizable quality. This makes it an excellent choice for security surveillance applications.

HLS vs. MPEG-DASH: How are they different?

While both protocols achieve the same goal, they have some significant differences in their approach and implementation.

Here are some of the key differences between HLS and MPEG-DASH:

| Differentiator | HLS | MPEG-DASH |
|---|---|---|
| Licensing | Proprietary; an Apple-developed streaming protocol, with no official standardization outside of Apple’s requirements. | Open standard; built by MPEG, with ISO/IEC 23009-1:2012 standardization. |
| Compatibility | Supported on pretty much every platform and browser (using the Media Source Extensions API where there is no native support), with native support on most mobile devices, not just those in the Apple ecosystem. | Also supported on any platform and browser with the Media Source Extensions API, but not natively by Safari, Apple TV, or iOS devices. |
| Codec Support | HLS is rigid about codec support in its spec, allowing only H.264 and H.265 for video, and only AAC, MP3, AC3, E-AC3, or HE-AAC for audio. | No restrictions. Supports any video codec (including H.264, H.265, VP9, AV1, etc.) and any audio codec (including AAC, MP3, AC3, E-AC3, Vorbis, Opus, etc.). |
| Segmenting for ABR | HLS uses MPEG-2 Transport Stream or fragmented MP4 (fMP4) segments, with a recommended segment duration of 6 seconds and a buffer of 3 segments. | MPEG-DASH typically uses segments in the ISO Base Media File Format (MPEG-4 Part 12), with a flexible segment duration (commonly 2-10 seconds, with no buffer requirement) that can be tuned for the use case, providing faster startup, lower latency, and more frequent segment updates. |
| Latency | For HLS, the recommended segment length (6 seconds per segment, with 3 segments loaded before playback can begin) leads to higher latency and slower segment updates, resulting in a longer time-to-first-frame and a ‘live’ stream lagging by 18-20 seconds. (Low-Latency HLS aims to address this, however.) | The DASH spec has no strict requirement for segment length and allows smaller segments (typically 1-5 seconds long), which means lower latency and a faster time-to-first-frame. DASH can also use chunked transfer encoding, sending segments as soon as they are available, with no minimum queuing requirement. |
| DRM (Digital Rights Management) | HLS uses Apple’s FairPlay DRM system, which is only supported on Apple devices. | MPEG-DASH supports a range of DRM systems, including Microsoft PlayReady, Google Widevine, and Adobe Primetime, but not FairPlay. |
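The “Segmenting for ABR” row also implies different manifests: HLS players read M3U8 playlists, while DASH players read an XML Media Presentation Description (MPD). Below is a minimal Python sketch of a static MPD built with the standard library’s `xml.etree.ElementTree`. The 4-second segment duration, bitrates, codec string, and segment file naming are assumptions for illustration; a production MPD would typically carry more (audio AdaptationSets, DRM `ContentProtection` elements, and so on).

```python
import xml.etree.ElementTree as ET

DASH_NS = "urn:mpeg:dash:schema:mpd:2011"
ET.register_namespace("", DASH_NS)  # serialize DASH elements without a prefix


def q(tag: str) -> str:
    """Qualify a tag name with the DASH MPD namespace."""
    return f"{{{DASH_NS}}}{tag}"


def build_mpd(duration: str, segment_seconds: int,
              reps: list[tuple[str, int, int, int]]) -> str:
    """Minimal static (on-demand) MPD with a single video AdaptationSet.

    reps holds (id, bandwidth_bps, width, height) for each Representation.
    """
    mpd = ET.Element(q("MPD"), {
        "type": "static",
        "mediaPresentationDuration": duration,  # ISO 8601 duration, e.g. "PT60S"
        "minBufferTime": "PT2S",
        "profiles": "urn:mpeg:dash:profile:isoff-live:2011",
    })
    period = ET.SubElement(mpd, q("Period"))
    aset = ET.SubElement(period, q("AdaptationSet"), {
        "mimeType": "video/mp4",
        "codecs": "avc1.640028",  # H.264 here, but DASH accepts any codec string
        "segmentAlignment": "true",
    })
    # One template shared by all Representations; the player substitutes
    # $RepresentationID$ and $Number$ to build each segment URL.
    ET.SubElement(aset, q("SegmentTemplate"), {
        "timescale": "1",
        "duration": str(segment_seconds),
        "startNumber": "1",
        "initialization": "$RepresentationID$/init.mp4",
        "media": "$RepresentationID$/seg-$Number$.m4s",
    })
    for rep_id, bandwidth, width, height in reps:
        ET.SubElement(aset, q("Representation"), {
            "id": rep_id,
            "bandwidth": str(bandwidth),
            "width": str(width),
            "height": str(height),
        })
    return ET.tostring(mpd, encoding="unicode")


mpd = build_mpd("PT60S", 4, [
    ("480p", 1_400_000, 854, 480),
    ("1080p", 5_500_000, 1920, 1080),
])
print(mpd)
```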

Overall, while both HLS and MPEG-DASH are popular video streaming protocols, they differ in their origins, segment size, adaptive streaming capabilities, DRM support, and browser support. The choice between the two depends on a variety of factors, including the target audience, the types of devices used, and the desired level of control over the streaming experience.

HLS or MPEG-DASH: Which one should you use?

Deciding between HLS (HTTP Live Streaming) and MPEG-DASH (Dynamic Adaptive Streaming over HTTP) depends on several factors. Here are some key considerations to help make the decision:

- Audience: If the target audience includes a large number of iOS users, HLS is the better choice, as it is natively supported on Apple devices and browsers, with carefully curated settings for optimized playback across that entire product family. If iOS users are not a concern for your use case, MPEG-DASH may be the better default, given that it’s an open standard with far fewer compliance requirements.

- Customization: If developer resources are limited, HLS may be the more practical choice, as it is widely supported and easier to implement with “good enough” defaults out of the box. MPEG-DASH, however, is less strict in its implementation and supports a wide variety of video and audio codecs, which makes it far more customizable. With more developer resources invested, MPEG-DASH can offer a more extensible feature set and better control over the streaming experience.

- Features: While both are comparable in terms of features, MPEG-DASH is far more flexible, being codec agnostic for both video and audio. This is a big advantage as not being limited to just H.264 or H.265 makes higher-quality broadcasts at lower bitrates possible.

- Latency: If you require low-latency streaming, DASH is the better option, as its support for chunked transfer encoding makes it the de facto choice over HLS for this criterion. Using CMAF narrows the gap, but that may require additional implementation and development time.

- Cost: While neither HLS nor DASH requires royalty fees, HLS permits only H.264 or H.265 for video, both of which are patented and can require licensing fees. Because DASH is codec-agnostic, you can use VP8/VP9 as your video codec combined with Opus as your audio codec, and avoid codec royalty and licensing fees throughout your streaming pipeline.
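As a rough illustration only, the considerations above can be folded into a small checklist function. The flags, and the rule of returning both protocols when requirements pull in different directions, are our own simplifying assumptions rather than an established decision procedure. A result containing both suggests packaging both formats, for example from shared CMAF segments.

```python
def recommend_protocols(*, ios_audience: bool, need_low_latency: bool,
                        royalty_free_codecs: bool,
                        limited_dev_resources: bool) -> set[str]:
    """Heuristic checklist mirroring the considerations above (not a hard rule)."""
    picks: set[str] = set()
    if ios_audience or limited_dev_resources:
        picks.add("HLS")        # native Apple support, "good enough" defaults
    if need_low_latency or royalty_free_codecs:
        picks.add("MPEG-DASH")  # chunked transfer encoding, codec-agnostic
    # With no iOS requirement and nothing else pulling either way,
    # default to MPEG-DASH, as the Audience point suggests.
    return picks or {"MPEG-DASH"}


print(recommend_protocols(ios_audience=True, need_low_latency=True,
                          royalty_free_codecs=False, limited_dev_resources=False))
```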

Ultimately, the decision between HLS and MPEG-DASH depends on your specific needs and circumstances. Both protocols have their advantages and disadvantages, and the choice should be based on factors such as your target audience, available resources, desired streaming features, and budget.

Using ImageKit to Easily Implement HLS/DASH

ImageKit is a cloud-based image/video optimization and digital asset management platform that can streamline the entire processing pipeline involved with both HLS and DASH. Using ImageKit’s intuitive, developer-friendly URL-based API, you can dynamically generate all the required variants and manifests from a single source video stored either in your own cloud storage or in ImageKit’s Media Library.

ImageKit helps you implement HLS or DASH streams by handling the Server side of the adaptive stream pipeline automatically for you. This involves:

- Segmenting and manifest file generation,

- Generating different quality “renditions” of the chunks, and serving them,

- Making sure the stream is CDN cached (ImageKit comes with AWS CloudFront by default) and served geographically nearest to your viewers.

No complex pipeline or cloud infra configuration needed; all you have to do is request ABR for a given video via URL parameters, specify streaming profiles for the variants you want to be generated, and ImageKit will generate an HLS or DASH stream for you in seconds.

The following URLs request a manifest with 3 variants (480p, 720p, and the source 1080p) generated from the source video:

For an Apple HLS manifest:

https://ik.imagekit.io/your_account_name/your-video.mp4/ik-master.m3u8?tr=sr-480_720_1080

For an MPEG-DASH manifest:

https://ik.imagekit.io/your_account_name/your-video.mp4/ik-master.mpd?tr=sr-480_720_1080

It’s that simple. On your end, all you have to do is use a Client-side player that supports HLS, MPEG-DASH, or preferably both (Video.js, for example) to embed this stream.
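If you generate these URLs in code, a small helper keeps the pattern in one place. The Python sketch below is hypothetical: the function name and parameters are ours, not part of any ImageKit SDK, and it only reproduces the URL shape shown in the two examples above (`ik-master.m3u8` or `ik-master.mpd`, plus `tr=sr-` followed by underscore-separated resolutions). Check ImageKit’s documentation for the full set of supported streaming options.

```python
from urllib.parse import quote

# Manifest extension per protocol, as in the example URLs above.
MANIFEST_EXT = {"hls": "m3u8", "dash": "mpd"}


def imagekit_abr_url(account: str, video_path: str, protocol: str,
                     resolutions: list[int]) -> str:
    """Hypothetical helper: assemble an ABR manifest URL in the pattern shown above."""
    if protocol not in MANIFEST_EXT:
        raise ValueError("protocol must be 'hls' or 'dash'")
    if not resolutions:
        raise ValueError("request at least one rendition")
    profile = "_".join(str(r) for r in resolutions)  # e.g. 480_720_1080
    return (f"https://ik.imagekit.io/{quote(account)}/{quote(video_path)}"
            f"/ik-master.{MANIFEST_EXT[protocol]}?tr=sr-{profile}")


print(imagekit_abr_url("your_account_name", "your-video.mp4", "hls", [480, 720, 1080]))
```

The resulting URL is what you would hand to your player as its source.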

Conclusion

To sum it up, while both HLS and DASH offer efficient ways to deliver video content over the internet, there are key differences between them that can impact your choice depending on your specific needs. HLS is better suited for delivering content to Apple devices and has wider compatibility, while DASH offers greater flexibility and is preferred for low-latency use cases.

Implementing either HLS or DASH, however, is a complex process that requires considerable developer time and effort. A service like ImageKit cuts through all of that, making both video storage and adaptive bitrate streaming a cakewalk. ImageKit’s video API makes HLS/DASH stream generation a near real-time process, with no tedious pipeline management needed. Don’t get caught up in infrastructure and config; just focus on delivering a smooth, seamless video streaming experience to your viewers.